Apple has blocked some developers from using Sign in with Apple after a report found that popular sign-in tools were being used by websites offering harmful AI image "undressing" services.

While Apple Intelligence and other generative AI efforts often give users legitimate and ethical ways to change an image, some go the opposite way. The rise of deepfakes has spawned a cottage industry of sites that let users submit photographs and have AI digitally remove the subject's clothes.

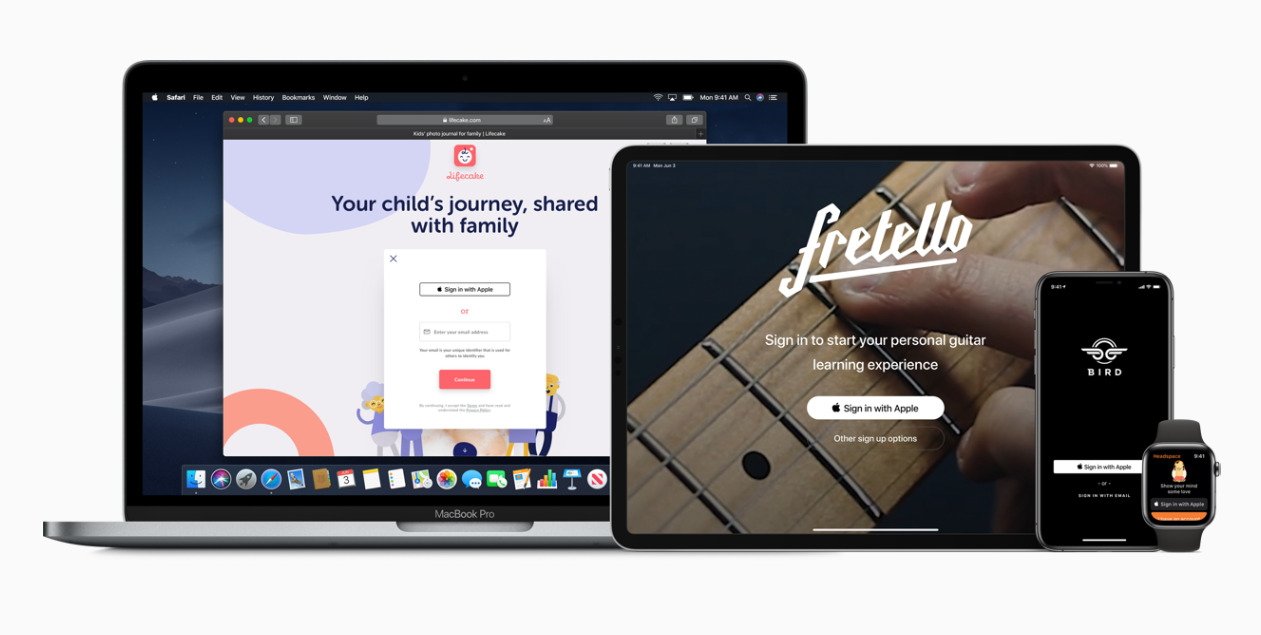

The sites, referred to as undress or "nudify" sites, can enable abuse and are a problem for tech companies. Wired reports that sign-in infrastructure from tech giants, including Sign in with Apple, is being used on the sites.

Of the 16 sites examined in the report, six used Sign in with Apple. Google's sign-in API was used on all 16, Discord's on 13, and Patreon's and Line's on two.

After being alerted to the issue, Apple and Discord both said they had removed API access for the developers responsible. Google said it would do the same where its terms were violated, while Patreon said it prohibits accounts that allow explicit imagery to be produced.

The use of these authentication systems lends the sites a visible veneer of credibility, even though their ownership and operation are extremely opaque. Little is known about who runs them.

While cutting off the sites' sign-in options won't shut them down, the sites will most likely face further action in the future.

Many of the sites also advertised Mastercard and Visa as payment options, displaying their logos. In Mastercard's case, a spokesperson said that purchases of "nonconsensual deepfake content are not allowed on our network," and that the company is prepared to take further action.